Guest post from Yineng Zhu, Andrew Permain and Joe Searle, MA Museum Studies students working with the Archives & Special Collections team.

Yineng

Hello, I’m Yineng Zhu and I have been doing a placement with Special Collections in the Library as part of my MA in Museum Studies. My project is about the University Library’s history and involves several tasks. First, I need to research the historical background in order to identify the significant events and key individuals in the Library’s history. I will then create an online timeline to record the information I have gathered. I also need to prepare and install a physical exhibition that focuses on a specific theme, individuals, or key dates in the Library’s history. Alongside this, I will learn how to carry out an oral history interview and summarize the recording. Finally, I will learn more about digitization and OMEKA in order to digitize archives, create metadata and build an exhibition page.

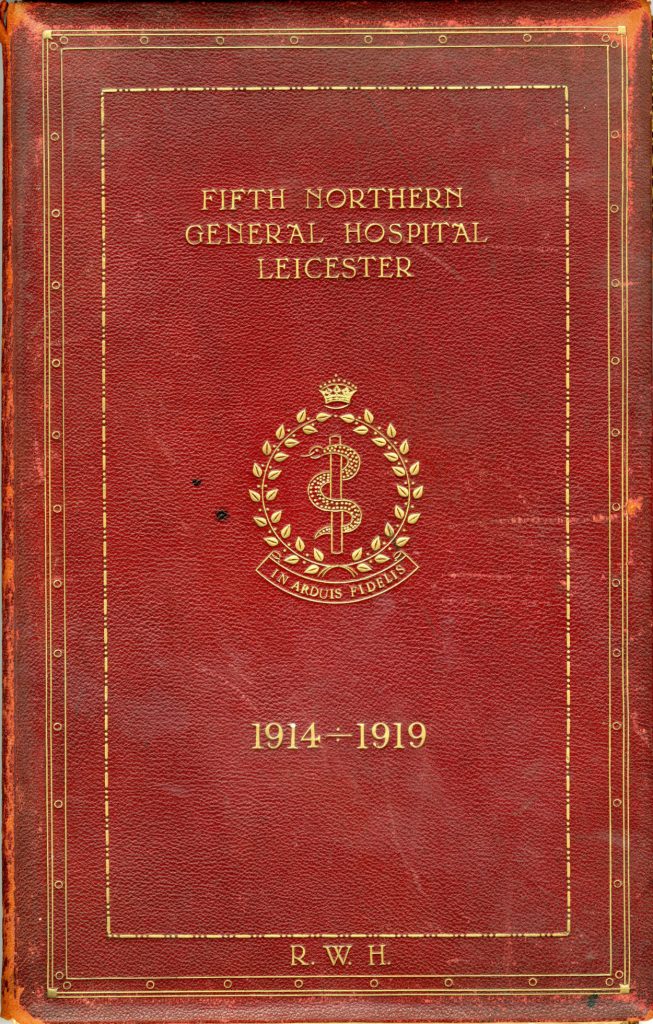

Over the last two weeks, I carried out a literature review and made detailed notes on the University Library’s history. Recently, I began creating a timeline using software called Tiki Toki. To illustrate the themes of the timeline convincingly, I plan to choose suitable supporting material, such as digital photographs, archive documents, and web links. I will also arrange the timeline by decade and use different colours to distinguish the historical themes, for example the appointment of staff, the opening of new library buildings, and various donations. The image above shows the timeline I have recently started creating.

Andrew

The project that I have been tasked with is researching the history of the Fielding Johnson Building, a history stretching back to the 19th century and the year of our Lord 1837. This grand building has seen much change since it began life as the Leicestershire and Rutland County Lunatic Asylum. Today it is still standing, having survived two world wars and various changes to make it suitable for the teaching of students. Over the past two weeks, I have spent many an hour sitting in the reading room examining various materials that relate to this grand and significant building’s history, including one book dedicated just to the history of the 5th Northern General Hospital (see image). Through a thorough reading and analysis of this material I have learnt much about the history of the building. I discovered that in the more recent editions of the University’s reports, the University’s famous geneticist Sir Alec Jeffreys is, in all but one photo, pictured with his DNA fingerprint invention. Another thing I discovered is that the Fielding Johnson Building at one time contained an aquarium.

Finally, I wish you all to remember the University’s motto, particularly as it has now disappeared from the University’s logo.

Ut vitam habeant: ‘so that they may have life’.

Joe

Hello, my name is Joe and I am an MA Museum Studies student currently on a work experience placement with the Special Collections team at the library of the University of Leicester. My official role for the next 8 weeks is ‘Digital Curator in Residence’ for the new Digital Reading Room – which simply means that I will be exploring different ways of presenting digitised archival content from Special Collections, using the cutting-edge technology available in the room. This blog, for the next 8 weeks, will be a record of my progress, as well as a place for me to explore my thoughts and ideas in what will most assuredly be an interesting placement.

Many Meetings

The beginning of the week was mostly spent on all the necessary introductions and formalities that accompany a new endeavour. In addition to meeting some of the Library staff (and the excellent Special Collections team), I got to meet Alex Moseley – the University’s resident expert on play and game-based learning – for a discussion about the parameters of my task and some possible approaches. This was followed by my somewhat unexpected participation in a workshop taking place in the Digital Reading Room, which was led by Ashley James Brown – a digital artist and ‘creative technologist’. The workshop had our group exploring novel input methods for digital technology by exploiting the principles of conductivity using simple circuits, and (rather surprisingly) some fruit. Though the workshop was certainly entertaining, I had hoped to gain some inspiration on how the multi-touch technology in the room could be used creatively, and this was sadly lacking.

A Literature Review

My main task for this first week was to review the literature on the use of multi-touch displays in educational and heritage contexts, which should help to inform my own projects going forward. To help get me started, I had a meeting with librarian Jackie Hanes, who had some useful advice for conducting literature searches. Then, it was left to me to explore the literature and present my findings, which can be found below.

Having had no previous experience in this field (my undergraduate degree was in History), I had no prior expectations, but as someone who has always had an interest in technology, I found it a fascinating exploration of a subject far beyond my usual domain. It was interesting to find that there is an entire field of study called Human-Computer Interaction (HCI), which seeks to explore and optimise the various ways in which people work with computers. In particular, there has been a large body of work exploring different methods of interaction beyond the now-standard windows, icons, menus and pointers (or WIMP, for short) on a standard computer monitor, and this is where we can find much of the research related to the use of multi-touch displays. As this technology has improved and matured, many have become interested in its possible applications, and we can now find many companies extolling the benefits of multi-touch technology to a wide variety of clients.

If we first turn our attention to the marketing material produced by the manufacturers of multi-touch displays, we can see what kinds of experiences are being offered, and find that the message is remarkably consistent. Consider ProMultis (the same company that supplied the technology in the Digital Reading Room), which claims that multi-touch ‘allows interaction and collaboration between users’[1]. Similarly, we are told that modern multi-touch technology can transform mundane displays into ‘an engaging multi-user interactive computing interface’[2] and turn visitors ‘from passive observers to actively engaged participants’[3]. The common experience being sold seems to be an engaging and collaborative one – and we can now turn to the academic literature to assess whether these claims hold any truth.

Many researchers in the field of HCI have naturally been curious about the possible benefits of multi-touch technology, especially when compared with the more traditional computer experience. One concept that has particularly gripped academics within the field is the idea of the ‘digital desk’ – a horizontal interactive display that replaces or augments the traditional office desk[4]. The term ‘desk’ is important here, as it implies use in a work environment for the purposes of improving productivity. Horizontal tabletop displays have been singled out specifically as being good candidates for encouraging group collaboration[5], a view supported by research showing that horizontal displays are better at facilitating group collaboration than vertical displays[6], and that the nature of multi-touch technology itself seems to encourage user participation, which leads to more equitable participation among people in groups.[7] However, it should be noted that these studies have mainly focused on group performance in work environments, and that when these multi-touch devices have been transferred to public settings – such as museums and galleries – the results have been less positive.[8]

Why, then, do multi-touch devices struggle to create the same levels of group collaboration in public environments as can be seen in work environments? The answer lies in the different ways that these devices are used in each environment, as well as in the different expectations of potential users. In work environments, for example, groups are working together with a shared purpose to solve specific problems over a long period, and these users are more likely to learn how to use the available technology effectively. In public environments, however, multi-touch devices have to serve a dual purpose – first to attract potential users to interact with the device, then to maintain their interest long enough for the device to fulfil its purpose.[9] In addition, users in public environments will have vastly different levels of digital literacy and computing experience, making it supremely difficult to create an experience that will appeal to everyone. An interesting question to ponder at this stage is whether the Digital Reading Room is a ‘public’ space or a ‘work’ space, since the answer has important implications for the type of content that will ultimately be successful on the technology in the room.

One crucial finding that emerges continually from the research literature is that multi-touch devices installed in public spaces strongly encourage sociality. This concept has even been given a name – the ‘honey-pot effect’ – which describes the phenomenon of an in-use multi-touch interactive inexorably drawing in other people.[10] This effect has been very well documented.[11] Although in one sense this is certainly positive – it ensures that these devices are in near-constant use in busy periods and they can often become the ‘star’ feature of an exhibition[12] – it also raises some key issues. For example, the high level of attention directed at multi-touch devices can be off-putting for some, especially when the interactions are viewable by a large audience, as many people are afraid of getting it ‘wrong’ and looking foolish.[13] This seems dependent on age, as children are often more eager to dive in and begin interacting, whereas adults are generally more cautious and prefer to watch others first before interacting themselves.[14] Another key issue is the potential for conflict. As more people begin interacting, there is always the chance that the actions of one will interfere with the actions of another – sometimes intentionally, but often by accident. Although HCI researchers have proposed various methods of dealing with this problem[15], these solutions have rarely carried over from academia into the real world – and conflicts, if they do arise, often have to be resolved by the users themselves.

Another related finding is that many people are still attracted to the novelty of large multi-touch displays – seemingly despite our increasing familiarity with touch devices. This causes problems for designers, for although it is easy to attract attention with playful methods of interaction, it is tremendously difficult to get users to progress further towards meaningful interaction with the actual content.[16] In museums, it has been found that ‘stay-time’ – the measure of how long visitors remain at any one feature – is often higher for multi-touch tables than elsewhere.[17] But these findings are dampened somewhat by the now-common knowledge that stay-times at traditional exhibits are often shockingly low, usually less than a minute on average[18], while multi-touch displays seem to average about 2-3 minutes. Based on such numbers, it is difficult to justify the often substantial costs of procuring multi-touch displays and developing content for them. Using Whitton and Moseley’s synthesised model of engagement from the fields of education and game studies, we find that very few multi-touch interactives move beyond ‘superficial engagement’ – which is characterised by simple attention and participation in a task. What is needed is ‘deep engagement’, which is characterised by emotional engagement that fosters an intrinsic desire to continue the task.[19] This tallies well with the museum studies literature, which has found that interactive stations providing ‘real interactivity’ – such as the creation of personal content – were preferred over stations that simply had ‘flat predefined interaction’.[20]

Some of the more successful attempts at promoting this deeper engagement have come from multi-touch interactive games. One example forced three players to work together in a game about sustainable development, where each player was assigned to a resource (food, shelter or energy) and victory could only be achieved through careful balancing and management of these resources, which required negotiation and collaboration.[21] Here, we can see some of the strengths of multi-touch displays manifesting themselves, but it must be said that simply designing an engaging multi-user game is not a blueprint for success. As noted earlier, the social nature of multi-touch displays can sometimes work against the designer’s intentions. A clear example of this can be seen in museums, where it has been found that adults may be more reluctant to use multi-touch tables after seeing children interacting with them – which suggests that the tables were perceived as toys[22] – and, similarly, that some parents refused to let their children use a multi-touch display, believing it to be a distraction from the ‘real’ knowledge on offer at the museum.[23] Clearly, game-based interaction can be a powerful tool but it is not a panacea for the engagement problem. Indeed, the authors of one study found that they had created an experience that was too engaging – meaning that the visitors were too focused on solving the challenges set before them and had little opportunity to reflect on the deeper issues of the content as intended.[24]

Finally, a brief note on interaction. It is sometimes assumed that multi-touch interaction, or touch interaction more generally – aided by gestures such as finger scrolling and pinch-to-zoom – is somehow more ‘natural’ for users, as opposed to using windows, icons, menus and pointers. However, as Donald Norman – a respected authority on usability design – has noted, ‘natural user interfaces are not natural’.[25] Clear evidence of this can be seen in some studies of multi-touch displays where users, firmly entrenched in the traditional paradigm of WIMP interactions, struggle to grasp seemingly ‘natural’ interactions[26]. We therefore cannot assume that simply by mimicking real-world interactions on multi-touch displays our users will somehow automatically know how to interact with them. All of this is not to deny the utility of touch interaction or gestures – they are certainly useful tools and, as noted earlier, often provide a captivating and ‘delightful’ experience for users.[27] Yet equally we cannot ignore decades of work on how to design good user experiences for computers. Multi-touch interaction is still relatively new, so we must constantly be ready to experiment and find what content best fits this method of interaction, and of course, always conduct thorough testing with real users in real environments.

In summary, some care should be taken to separate the reality of multi-touch use from the hype that often surrounds it. But to end on a note of optimism, it is unlikely that we have yet seen the full potential of multi-touch technology. Now is certainly an exciting time, given the widespread proliferation of multi-touch devices and the development of software designed to easily create interactive experiences. I will be using one such package – IntuiFace – to design and develop my own interactive experiences for the technology in the Digital Reading Room, and this will form the bulk of my placement for the next seven weeks. Hopefully, the insight gained from this literature review will give me a good foundation on which to build, and I am very much looking forward to exploring how multi-touch technology may best be used.

Having finished a review of the literature on the use of multi-touch displays in various contexts, it was now time to begin learning how to create these experiences for myself. To do this, I have been using ‘IntuiFace’ – a software package designed to create interactive experiences for touchscreen displays without having to write code.

My first exposure to IntuiFace began in Week 1, when I was introduced to Library staff who had previously developed content using the program. Examining their work provided a helpful starting point, but unfortunately no-one had accrued a significant amount of experience with IntuiFace (the Library staff are, after all, very busy), so it was left to me to begin teaching myself how to use it.

Thankfully, there exists a wealth of information online to help new users. I began the week by working through this material and completing the IntuiFace tutorial, which guides users through creating their first experience. Though certainly useful, this tutorial only covers a tiny fraction of what it is possible to build using IntuiFace, so the best way to learn from this point was to begin building my own prototypes.

Prototype 1 – Timeline of the First World War

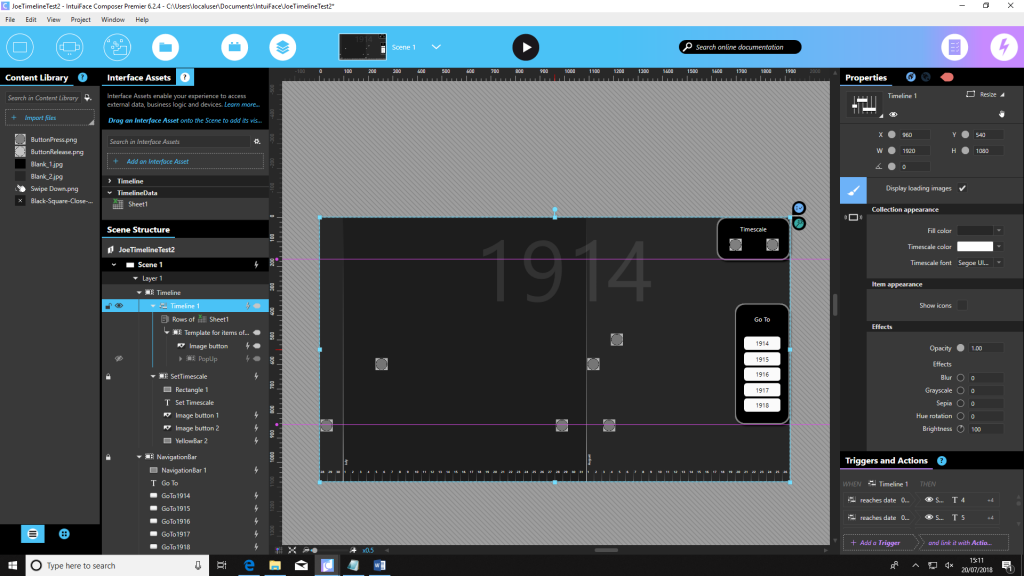

When considering what to build, I reminded myself that I needed to somehow leverage the unique advantages offered by large multi-touch displays. Bearing this in mind, I decided to develop an interactive timeline, which seemed to offer a good balance between information and interactivity. The timeline showed major events in the First World War, a subject I chose simply because the information was readily available and familiar to me, which allowed me to concentrate purely on its design. Developing this prototype helped to sharpen my skills with IntuiFace, and also served as a valuable proof-of-concept – if my final output features a timeline (which seems likely), then I can draw from my experience of building one here.

Figure 1: The Timeline Prototype viewed inside IntuiFace

Prototype 2 – Interactive Map of Leicester

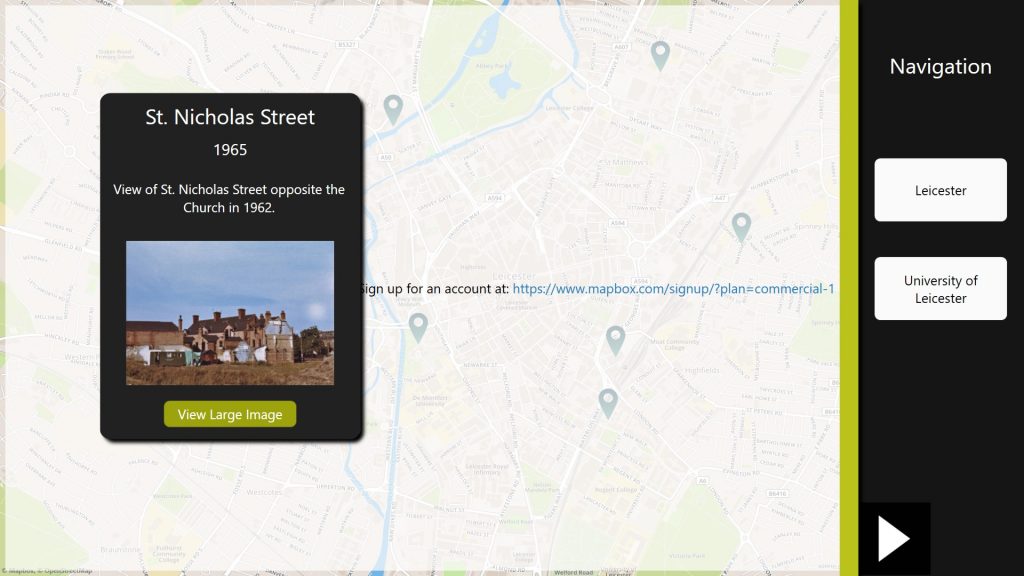

My placement requires me to showcase digitised content from the University’s Archives and Special Collections, which, at the time of writing, mostly seems to feature the local history of Leicester. The main problem I am facing is that it is difficult to find ways of making this material compelling to interact with. One solution I have developed is my second prototype, an interactive map of Leicester, which uses material from the ‘Vanished Leicester’ collection – a series of photographs of streets and buildings in Leicester which have since been demolished. Thanks to a previous project, some of these photographs now have GPS data, which allows me to plot their locations accurately on a map in IntuiFace. This data has already been plotted onto Google Maps using Google Fusion Tables, but the advantage of importing it into IntuiFace is that I can build in much more interactivity than is possible with Google Maps. It remains to be seen if this idea will be developed further, but building it certainly required me to dig deeper into the more advanced features of IntuiFace, which will help me in my future projects.

Figure 2: The Interactive Map experience, running in IntuiFace Player

Prototype 3 – Exploring Interactivity

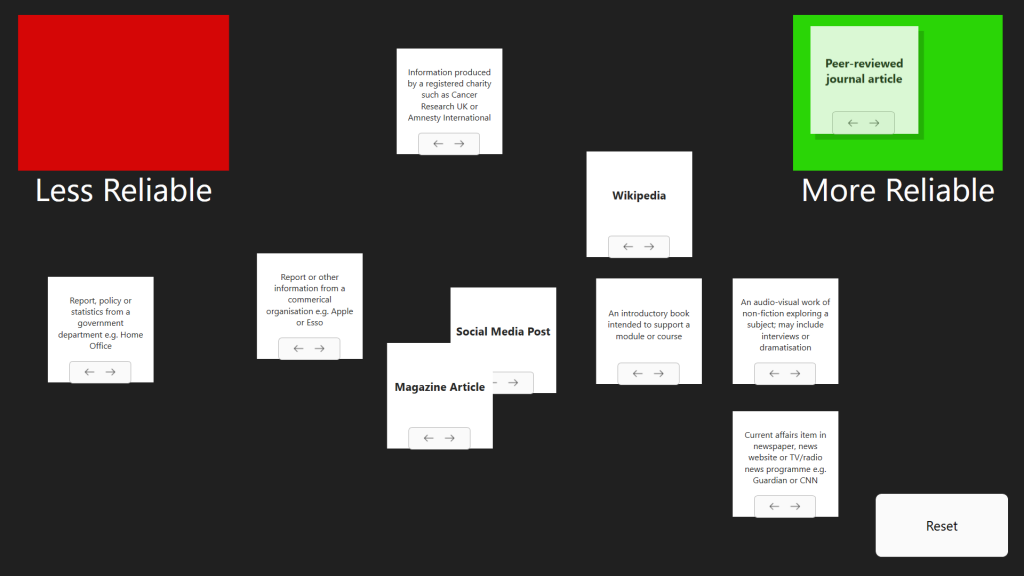

My third prototype was not a single project – rather, I thought it would be valuable to experiment with various methods of interaction using touch. This was mainly to satisfy my own curiosity: since I have never before developed content for touch devices, I wanted to get a sense of what interactions are possible. First, I decided to rebuild some previous work – the ‘Trustometer’ – and try to add more interactivity to it. This was a simple experience that had users sort various sources by their reliability. My input was to make it so that users could sort the sources into two boxes – more reliable and less reliable – with the sources disappearing when users put them in the right box. Interestingly, I remade this experience a second time when I discovered a way to build the same level of functionality in a more efficient manner.

Figure 3: My rebuilt version of the ‘Trustometer’ experience, with added interactivity

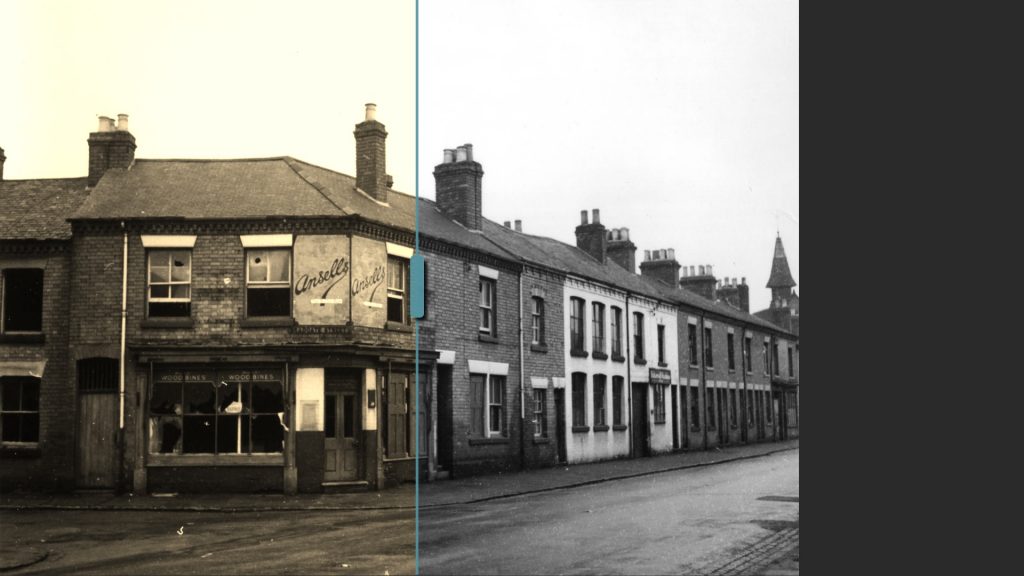

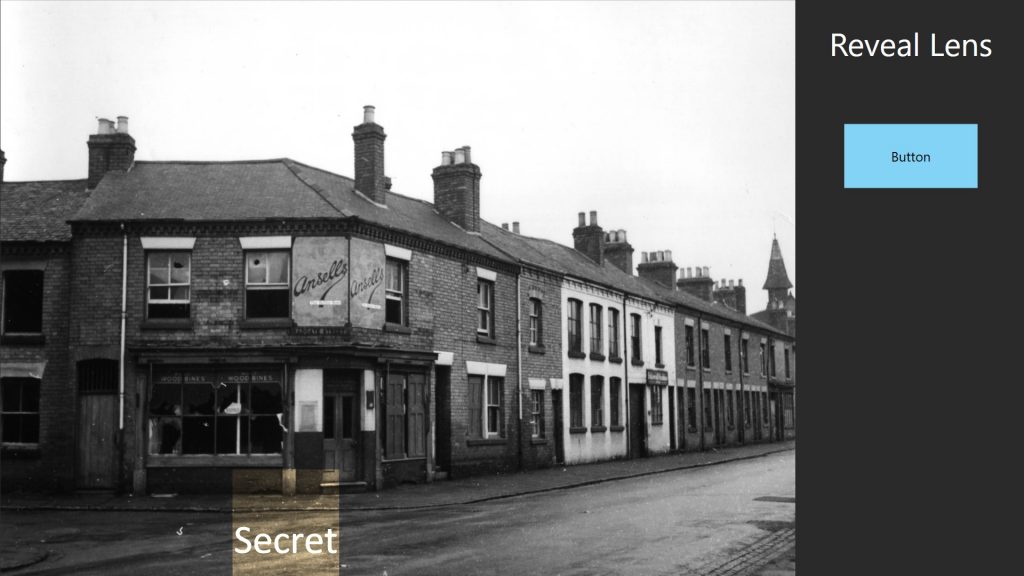

My other experiments were short attempts to achieve a specific effect. For example, I discovered ways to build parallax scrolling, a before/after image comparison that can be interacted with, and an ‘x-ray’ effect where users can drag a box which reveals hidden details in an image. These experiments have helped me to see different methods of interaction beyond simple taps and gestures, and will no doubt feature in my projects in the future.

Figure 4: Before/after image comparison with draggable slider

Figure 5: ‘X-Ray’ Image viewer

Within the past week, I have gained a significant amount of experience using IntuiFace, and now feel confident using its basic functions – and even some of its more advanced features. There is certainly more to learn, but for now, my knowledge is sufficient to build working prototypes and explore possible ideas. Next week, I will be testing how content performs on the technology in the Digital Reading Room, and seeing what special considerations may have to be taken into account, for example, when publishing content on the interactive wall versus the table. This will ultimately result in a complete prototype from which we can gain valuable information about the potential of multi-touch technology.
